Textual Explanations for Automated Commentary Driving

Abstract

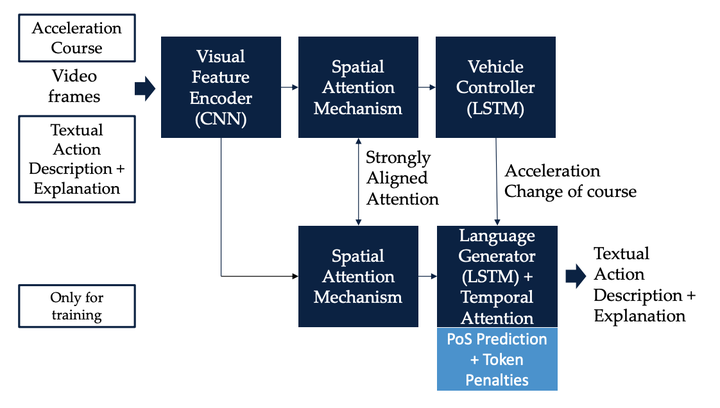

Providing natural language explanations for the predictions of deep-learning-based vehicle controllers is critical because it enhances transparency and makes auditing easier. In this work, a state-of-the-art (SOTA) prediction and explanation model is thoroughly evaluated and validated (as a benchmark) on the new Sense–Assess–eXplain (SAX) dataset. Additionally, we developed a new explainer model that improves on the baseline architecture in two ways: (i) the integration of part-of-speech prediction and (ii) the introduction of special token penalties. On the BLEU metric, our explanation generation technique outperformed the SOTA by a factor of 7.7 when applied to the BDD-X dataset. The description generation technique also improved by a factor of 1.3. Hence, our work contributes to the realisation of future explainable autonomous vehicles.