Explanations in Autonomous Driving: A Survey

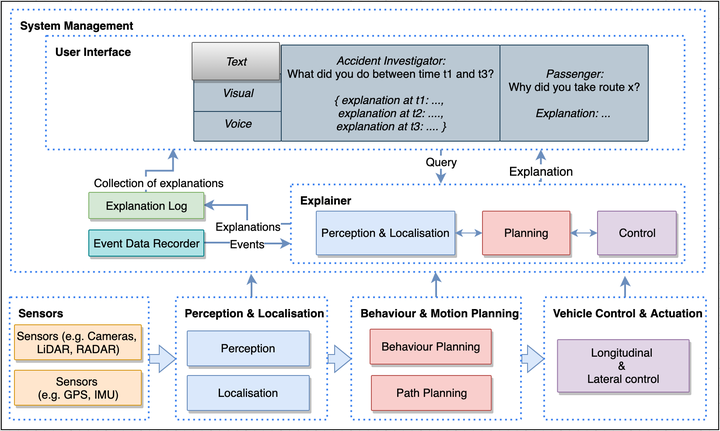

Explanation generation

Abstract

The automotive industry has witnessed an increasing level of development in the past decades, from manufacturing manually operated vehicles to manufacturing vehicles with a high level of automation. With the recent developments in Artificial Intelligence (AI), automotive companies now employ black-box AI models to enable vehicles to perceive their environment and make driving decisions with little or no influence from a human. With the hope of deploying autonomous vehicles (AVs) on a commercial scale, the acceptance of AVs by society becomes paramount and may largely depend on their degree of transparency, trustworthiness, and compliance with regulations. The assessment of these acceptance requirements can be facilitated through the provision of explanations for AVs' behaviour. Explainability is therefore seen as an important requirement for AVs. AVs should be able to explain what they have ‘seen’, done, and might do in the environments where they operate. In this paper, we provide a comprehensive survey of the existing work in explainable autonomous driving. First, we open by providing a motivation for explanations and examining existing standards related to AVs. Second, we identify and categorise the different stakeholders involved in the development, use, and regulation of AVs and show their perceived need for explanation. Third, we review previous work on explanation in the different AV operations. Finally, we close by pointing out pertinent challenges and future research directions. This survey serves to provide the fundamental knowledge required by researchers who are interested in explanation in autonomous driving.