Towards Accountability: Providing Intelligible Explanations in Autonomous Driving

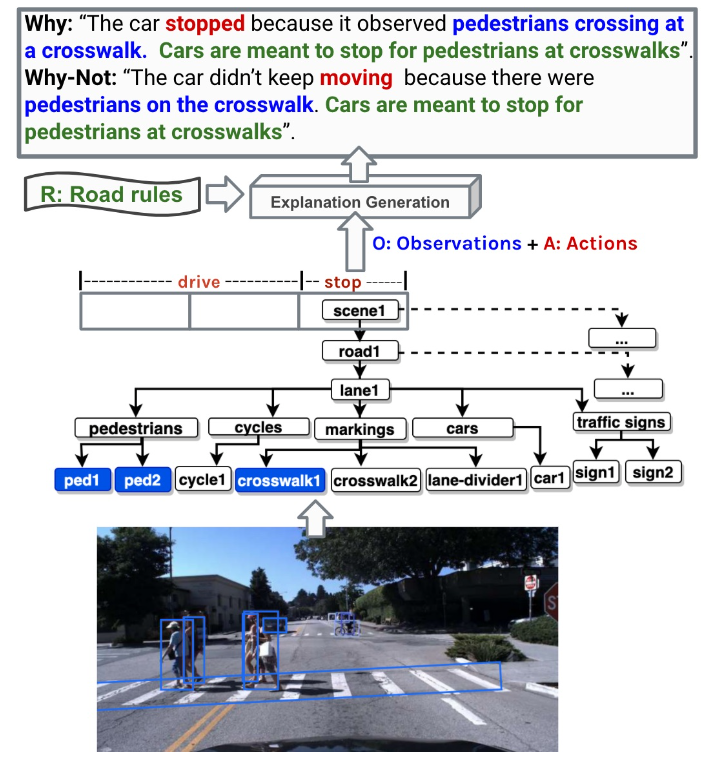

Interpretable representation for explanation generation

Abstract

The safe deployment of autonomous vehicles (AVs) in real-world scenarios requires that AVs be accountable. One way of ensuring accountability is to provide explanations of what a vehicle has ‘seen’, what it has done, and what it might do in a given scenario. Intelligible explanations can help developers and regulators assess AV behaviour and, in turn, uphold accountability. In this paper, we propose an interpretable (tree-based) and user-centric approach for explaining autonomous driving behaviours. In a user study, we examined different explanation types triggered by investigatory queries, and conducted an experiment to identify the scenarios that require explanations and the explanation types appropriate for each. Our findings show that the choice of explanation type matters most in emergency and collision driving conditions. Furthermore, providing intelligible explanations (especially contrastive ones) with causal attributions can improve accountability in autonomous driving. The proposed interpretable approach can help realise such intelligible explanations with causal attributions.
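The abstract does not spell out how the tree-based representation produces explanations. As a purely illustrative sketch, assume a hand-written binary decision tree over boolean scene features. All names here (`Node`, `Leaf`, `why`, `why_not`, `pedestrian_ahead`, `light_is_red`) are hypothetical and are not the paper's method. The sketch shows how the path taken through the tree can serve as a causal "why" explanation, and how comparing it with the path to an alternative action (the foil) yields a contrastive "why not" explanation.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Leaf:
    action: str  # driving action chosen at this leaf (hypothetical labels)


@dataclass
class Node:
    feature: str  # hypothetical boolean scene feature tested at this node
    if_true: Leaf | Node
    if_false: Leaf | Node


def decide(tree, scene):
    """Follow the tree for a scene; return the action and the (feature, value) tests taken."""
    path = []
    while isinstance(tree, Node):
        value = bool(scene[tree.feature])
        path.append((tree.feature, value))
        tree = tree.if_true if value else tree.if_false
    return tree.action, path


def fmt(conditions):
    return " and ".join(f"{feature}={value}" for feature, value in conditions)


def why(tree, scene):
    """Causal explanation: the conditions on the decision path led to the action."""
    action, path = decide(tree, scene)
    return f"I chose '{action}' because {fmt(path)}."


def leaf_conditions(tree, conds=()):
    """Yield every leaf action together with the conditions needed to reach it."""
    if isinstance(tree, Leaf):
        yield tree.action, list(conds)
    else:
        yield from leaf_conditions(tree.if_true, conds + ((tree.feature, True),))
        yield from leaf_conditions(tree.if_false, conds + ((tree.feature, False),))


def why_not(tree, scene, foil):
    """Contrastive explanation: the fewest condition changes that would yield the foil."""
    action, _ = decide(tree, scene)
    if action == foil:
        return f"I already chose '{foil}'."
    best = None
    for leaf_action, conds in leaf_conditions(tree):
        if leaf_action != foil:
            continue
        # Conditions on the foil's path that the current scene does not satisfy.
        diffs = [(f, v) for f, v in conds if bool(scene[f]) != v]
        if best is None or len(diffs) < len(best):
            best = diffs
    if best is None:
        return f"The policy never chooses '{foil}'."
    return f"I would have chosen '{foil}' instead of '{action}' if {fmt(best)}."


# Hypothetical toy policy: stop for pedestrians or red lights, otherwise drive on.
policy = Node("pedestrian_ahead",
              if_true=Leaf("stop"),
              if_false=Node("light_is_red",
                            if_true=Leaf("stop"),
                            if_false=Leaf("drive on")))

scene = {"pedestrian_ahead": True, "light_is_red": False}
print(why(policy, scene))                  # I chose 'stop' because pedestrian_ahead=True.
print(why_not(policy, scene, "drive on"))  # I would have chosen 'drive on' instead of 'stop' if pedestrian_ahead=False.
```

Choosing the foil leaf with the fewest mismatched conditions is one simple heuristic for keeping contrastive explanations short. A learned tree and richer, non-boolean features would need a different comparison.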