Context-based Image Explanations for Deep Neural Networks

Explanation generation

Abstract

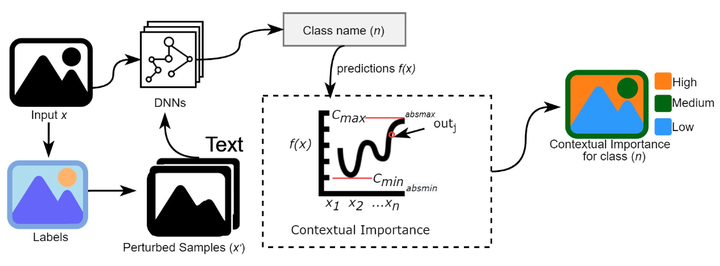

With the increased use of machine learning in decision-making scenarios, there has been growing interest in explaining and understanding the outcomes of machine learning models. Despite this interest, existing work on interpretability and explanation has mostly targeted expert users. Explanations for end-users have been neglected in many practical applications (e.g., image tagging, caption generation). To satisfy the need for justification, non-expert users must be able to understand the features and how they affect an instance-specific prediction. In this paper, we propose a model-agnostic method for generating context-based explanations aimed at general users. We apply partial occlusion to segmented components to identify the contextual importance of each segment in scene classification tasks. We then generate explanations based on feature importance in a given context. We present visual and text-based explanations: (i) a saliency map that presents the pertinent components with a descriptive textual justification, and (ii) a visual map with a color bar graph showing the relative importance of each feature for a prediction. In a user study (N=50), we observed that our proposed explanation methods outperformed existing gradient-based and masking-based methods. Hence, our proposed explanation method could be deployed to explain models' decisions in real-world applications.
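As a rough illustration of the partial-occlusion idea described in the abstract, the following minimal sketch scores each segment by how much the target-class probability drops when that segment is blended toward a fill value. It is not the authors' implementation: the function names, the `alpha` blending strength, the `fill` value, and the normalization into relative importances are all assumptions made for illustration, and `predict_fn` stands in for any black-box classifier.

```python
import numpy as np

def segment_importance(predict_fn, image, segments, target_class, alpha=0.5, fill=0.0):
    """Score each segment by the drop in target-class probability under partial occlusion.

    predict_fn   : hypothetical black-box callable, image -> 1-D array of class probabilities
    image        : array of shape (H, W) or (H, W, C)
    segments     : integer label map of shape (H, W), one label per segment
    alpha        : assumed occlusion strength in [0, 1]; 1.0 replaces the segment with `fill`
    """
    image = np.asarray(image, dtype=float)
    base = float(predict_fn(image)[target_class])
    scores = {}
    for label in np.unique(segments):
        mask = segments == label
        occluded = image.copy()
        # Blend only the pixels of this segment toward the fill value.
        occluded[mask] = (1.0 - alpha) * image[mask] + alpha * fill
        scores[int(label)] = base - float(predict_fn(occluded)[target_class])
    return scores

def relative_importance(scores):
    """Normalize non-negative drops so they sum to 1 (an assumed convention for a bar graph)."""
    positive = {k: max(v, 0.0) for k, v in scores.items()}
    total = sum(positive.values())
    if total == 0.0:
        return {k: 0.0 for k in positive}
    return {k: v / total for k, v in positive.items()}

# Toy usage: a fake two-class "model" that only looks at the left half of the image.
def toy_predict(img):
    p = float(np.mean(img[:, :2]))
    return np.array([p, 1.0 - p])

toy_image = np.ones((4, 4, 1))
toy_segments = np.zeros((4, 4), dtype=int)
toy_segments[:, 2:] = 1  # segment 0 = left half, segment 1 = right half

toy_scores = segment_importance(toy_predict, toy_image, toy_segments, target_class=0)
toy_relative = relative_importance(toy_scores)
```

In this toy setup only the left-half segment influences the prediction, so it receives all of the relative importance. In practice the label map would come from a segmentation step and `predict_fn` from the scene classifier being explained.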